Site indexing: basic principles. How, what for, and why

Basic principles of site indexing

Author: Webakula

Learn the subtleties of site indexing and how to speed it up.

Why do you need a website? So that people can find the information they need. But there are many resources on the web, and people rarely go beyond the first two pages of search results. So for users to see your resource in particular, it has to be near the top of the results. That is the job of search engine promotion.

Indexing is an important part of promotion. The search engine reads information from the site, processes it with its algorithms, and stores the results in its database.

You can make indexing work better by packaging the site's information so that search engines can process it more easily.

Site Indexing: Getting Started

Indexing will not start until a search engine's robot learns that a new resource exists. You can notify it by submitting the site to the search engines you need: webmaster.yandex.ru/addurl.xml for Yandex and google.com/webmasters/tools/submit-url?hl=ru for Google.

Alternatively, you can place a link to the new site on a site that is already indexed.

Submitting the site directly to a search engine usually gets it indexed faster.

Indexing Frequency: How to Speed It Up

Yandex cannot re-index the billions of web addresses in its database every day, so each site is indexed at a certain frequency.

This frequency is not the same for every site. Some resources are checked for changes constantly, while search robots hardly ever visit others.

You can shorten the interval between indexing visits, and that makes promotion more effective: every change you make reaches search results sooner.

How can you get the site indexed more often? Start with the factors that affect indexing:

- Hosting quality: the more heavily loaded the server hosting your site, the less often the search engine robot will visit it, because an overloaded server responds slowly.

- Update frequency: the robot is "interested" in places where things change constantly, so if nothing is updated, do not expect regular indexing. Keep in mind that it is the updated pages that get re-indexed. Adding a news block to the home page therefore keeps the whole resource active.

- Traffic and behavioral factors: if people visit the resource, stay on it, subscribe and so on, the site stays "in sight" of the search engine, and the intervals between indexing get shorter.

Importantly, you can influence each of these factors and make the resource "hospitable" to search engine robots.

Checking site indexing: basic methods

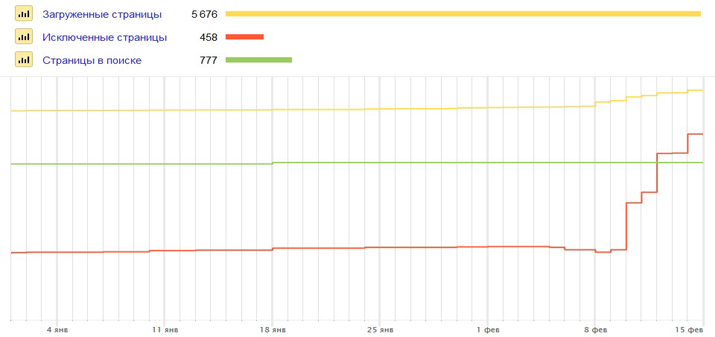

How do you track the effect of your actions? You need to know when indexing took place, and several services can show you.

For example, the URL check section of Yandex.Webmaster shows when a page was indexed, why it is missing from search, and which version of the document users see in search results. It also gives recommendations for speeding up indexing.

You can also check indexing with the site: operator. Enter site:mysite.ru in the search bar (no space after the colon) to get a list of the site's pages that are in the index.
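A site: check can also be saved as a ready-made link. The sketch below builds such links for a placeholder domain. It assumes that Yandex's public search page takes the query in a `text` parameter and Google's in `q`. Both are ordinary search URLs, not an official API. The helper name is hypothetical.

```python
from urllib.parse import urlencode

# Hypothetical helper for building site: search links.
# Assumption: "text" (Yandex) and "q" (Google) are the query parameters
# of the public search pages; this is not an official API.
SEARCH_ENDPOINTS = {
    "yandex": ("https://yandex.ru/search/", "text"),
    "google": ("https://www.google.com/search", "q"),
}


def site_query_urls(domain: str) -> dict:
    """Return one search URL per engine that runs a site: query for domain."""
    query = f"site:{domain}"  # no space after the colon
    return {
        engine: f"{base}?{urlencode({param: query})}"  # ':' becomes %3A
        for engine, (base, param) in SEARCH_ENDPOINTS.items()
    }


for engine, url in site_query_urls("example.com").items():
    print(engine, url)
```

The result list is only an estimate of what is indexed. For an exact status of a given page, the Yandex.Webmaster URL check is more reliable.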

SE Ranking can also check URL indexing. Its tool tracks a given URL in search, so you can see whether the page is in the index at all and whether users are shown the right page.

Rules for controlling search engine robots

The robot downloads information from a site according to a strict algorithm, and the instructions it follows are located on the site itself. In other words, you are the one who tells the robot what to download and what to skip.

For example, robots should never be allowed to crawl customers' personal data. For this purpose there is a special file, robots.txt, that lists the pages that must not be indexed.

The line Disallow: /admin keeps the robot away from every URL whose path starts with /admin. Disallow: /images keeps the /images folder, where site images are usually stored, out of the index.
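You can try these rules locally before uploading the file. This sketch uses Python's standard `urllib.robotparser` module to parse a hypothetical robots.txt for the placeholder site example.com. It then asks whether a robot may fetch several URLs. No network access is needed, because `parse()` takes the file's lines directly.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a placeholder site, example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin
Disallow: /images
"""

parser = RobotFileParser()
# parse() accepts the file's lines, so nothing is downloaded.
parser.parse(ROBOTS_TXT.splitlines())

# Disallow rules match by path prefix.
for url in (
    "https://example.com/",
    "https://example.com/admin/orders",
    "https://example.com/administrator",  # also starts with /admin
    "https://example.com/images/logo.png",
    "https://example.com/blog/post-1",
):
    print(url, parser.can_fetch("Yandex", url))
```

/administrator is blocked too, because the rule matches any path that starts with /admin. Write Disallow: /admin/ if only the folder should be closed. Python's parser applies the first matching rule in file order. Search engines may resolve overlapping Allow and Disallow rules differently, so treat this as a quick sanity check.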

If you specify the sitemap address in robots.txt, the robot can find every page listed in the sitemap that is not blocked from indexing.
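Here is a minimal sketch of how a Sitemap line and a ban work together. It again uses the standard `urllib.robotparser` module, whose `site_maps()` method (Python 3.8+) returns the sitemap addresses found in the file. The robots.txt content and the page URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one ban plus the sitemap address.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the listed sitemap URLs, or None if there are none.
print(parser.site_maps())

# Hypothetical pages taken from that sitemap: only pages without
# a ban are open to the robot.
sitemap_pages = [
    "https://example.com/catalog/",
    "https://example.com/admin/login",
]
crawlable = [url for url in sitemap_pages if parser.can_fetch("*", url)]
print(crawlable)
```

The Sitemap directive does not depend on the User-agent groups, so it can go anywhere in the file.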

Mistakes in robots.txt are common. The most frequent one is accidentally blocking pages that should be indexed.

Other typical errors:

- Wrong HTTP response code: if the server returns anything other than 200 for robots.txt, the file may be ignored.

- Cyrillic characters: directives written in Cyrillic are ignored. A site address written in Cyrillic letters must be given in Punycode.

- Excessive size: the file must not exceed 32 KB.
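The three errors above can be pre-checked locally. This is a sketch, not a replacement for the Yandex validator. It assumes you already have the HTTP status code and raw bytes of robots.txt, for example from your own download code. The helper names are hypothetical. The 32 KB limit and the 200 rule come from the list above. Punycode conversion uses Python's built-in `idna` codec, and paths are percent-encoded with `urllib.parse.quote`.

```python
from typing import List
from urllib.parse import quote

MAX_ROBOTS_BYTES = 32 * 1024  # the 32 KB limit from the list above


def check_robots(status_code: int, body: bytes) -> List[str]:
    """Hypothetical pre-check for the three robots.txt errors listed above."""
    problems = []
    if status_code != 200:
        problems.append(f"HTTP {status_code}: robots.txt may be ignored")
    if len(body) > MAX_ROBOTS_BYTES:
        problems.append(f"{len(body)} bytes: larger than 32 KB")
    text = body.decode("utf-8", errors="replace")
    for lineno, line in enumerate(text.splitlines(), start=1):
        directive = line.split("#", 1)[0]  # comments are not checked
        if not directive.isascii():
            problems.append(f"line {lineno}: non-ASCII (e.g. Cyrillic) characters")
    return problems


def to_ascii_url(host: str, path: str = "/") -> str:
    """Punycode a Cyrillic host and percent-encode a Cyrillic path."""
    return "https://" + host.encode("idna").decode("ascii") + quote(path)


print(check_robots(200, "User-agent: *\nDisallow: /каталог\n".encode("utf-8")))
print(to_ascii_url("сайт.рф", "/каталог"))
```

The second call prints an ASCII-only address that can safely be used in robots.txt in place of the Cyrillic one.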

Simple landing pages do not need robots.txt. If your site does, check it on the dedicated Yandex page, webmaster.yandex.ru/robots.xml: upload the file and any errors will be highlighted automatically.

Working with the sitemap

The sitemap is a file that lists the pages to be indexed. Special tags in it let you control indexing. For example, the <priority> tag marks a page's priority, and <changefreq> sets how often the page is updated.
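Here is a minimal sketch of generating such a file with Python's standard `xml.etree.ElementTree`. The `urlset`, `url`, `loc`, `changefreq` and `priority` tags, the namespace and the allowed `changefreq` values come from the sitemaps.org protocol. The page URLs and the `build_sitemap` helper are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
# Values allowed for <changefreq> by the sitemaps.org protocol.
CHANGEFREQ_VALUES = {"always", "hourly", "daily", "weekly", "monthly", "yearly", "never"}


def build_sitemap(pages) -> str:
    """Build a sitemap from (loc, changefreq, priority) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, changefreq, priority in pages:
        if changefreq not in CHANGEFREQ_VALUES:
            raise ValueError(f"bad changefreq {changefreq!r} for {loc}")
        if not 0.0 <= priority <= 1.0:
            raise ValueError(f"priority must be 0.0-1.0, got {priority} for {loc}")
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters are escaped
        ET.SubElement(url, "changefreq").text = changefreq
        ET.SubElement(url, "priority").text = f"{priority:.1f}"  # one decimal place
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://example.com/", "daily", 1.0),  # home page with a news block
    ("https://example.com/about", "monthly", 0.5),
]))
```

Search engines treat <priority> and <changefreq> as hints rather than commands. Still, they are a cheap way to point the robot at the pages that change most often.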

The sitemap file must be hosted on your own site and must not contain critical errors.
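Some of these requirements can be checked before you upload the file. This sketch treats three things as critical errors: malformed XML, a `<url>` without `<loc>`, and a URL on another host. It also applies the protocol's 50,000-URL limit per file. It only handles a plain `<urlset>` sitemap, not a sitemap index, and the `check_sitemap` name is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List
from urllib.parse import urlparse

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def check_sitemap(data: bytes, site_host: str) -> List[str]:
    """Return problems found in a <urlset> sitemap meant for site_host."""
    try:
        root = ET.fromstring(data)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    if root.tag != NS + "urlset":
        return [f"unexpected root element {root.tag}"]
    urls = root.findall(NS + "url")
    problems = []
    if len(urls) > MAX_URLS:
        problems.append(f"{len(urls)} URLs: more than {MAX_URLS}")
    for i, url in enumerate(urls, start=1):
        loc = (url.findtext(NS + "loc") or "").strip()
        if not loc:
            problems.append(f"url #{i}: missing <loc>")
        elif urlparse(loc).hostname != site_host:
            problems.append(f"url #{i}: {loc} is not on {site_host}")
    return problems


sample = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://other-site.org/page</loc></url>
</urlset>"""
print(check_sitemap(sample, "example.com"))
```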

You can also check that the sitemap is correct in Yandex: webmaster.yandex.ru/sitemaptest.xml.